A few years ago, Davide Scaramuzza’s lab at the University of Zurich introduced us to the usefulness of a kind of dynamic vision sensor called an event camera. Event cameras are almost entirely unlike a normal camera, but they’re ideal for small, fast-moving robots when you care more about not running into things than you do about knowing exactly what those things are.

In a paper submitted to IEEE Robotics and Automation Letters, Antoni Rosinol Vidal, Henri Rebecq, Timo Horstschaefer, and Professor Scaramuzza present the very first use of an event camera to autonomously pilot a drone, and it promises to enable things that drones have never been able to do before.

The absolute cheapest way to get a drone to navigate autonomously is with a camera. At this point, cameras cost next to nothing, and as long as you fuse them with an inertial measurement unit (IMU), don’t move very fast, and have reliable lighting, they can provide totally decent state estimation, which is very important.

State estimation, or understanding exactly where you are and what you’re doing, is a thing that sounds super boring but is absolutely critical for autonomous robots. In order for a robot to make decisions that involve interacting with its environment, it has to have a very good sense of its own location and how fast it’s moving and in what direction. There are many ways of tracking this, with the most accurate being beefy and expensive off-board motion capture systems. As you start trying to do state estimation with robots that are smaller and simpler, the problem gets more and more difficult, especially if you’re trying to deal with highly dynamic platforms, like fast-moving quadrotors.

This is why there’s such a temptation to rely on cameras, but cameras have plenty of issues of their own. The two most significant issues are that camera images tend to blur when the motion of the sensor exceeds what can be “frozen” by the camera’s frame rate, and that cameras (just like our eyes) are very Goldilocks-y about light: It has to be just right, not too little or too much, and you can forget about moving between the two extremes.

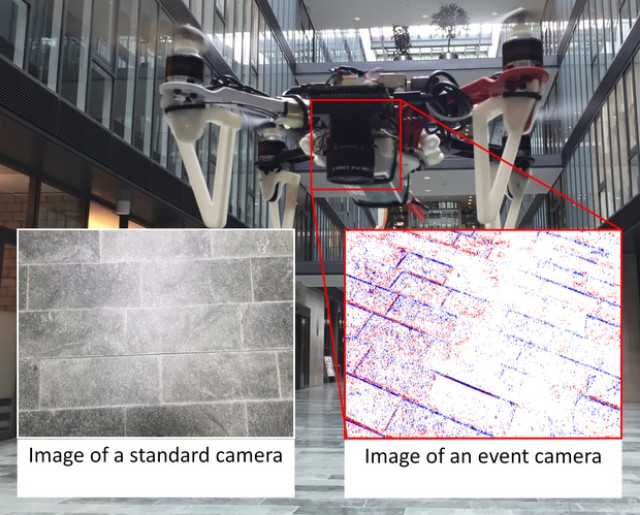

Event cameras are completely different. Instead of recording what a scene looks like, as a conventional camera would, event cameras record how a scene changes. Point an event camera at a scene that isn’t moving and it won’t show you anything at all. But as soon as the camera detects motion (pixel-level light changes, to be specific), it’ll show you just that motion on a per-pixel basis and at a very high (millisecond) refresh rate. If all you care about is avoiding things while moving, an event camera is perfect for you, and because event cameras just look for pixel changes, they remain sensitive in very low light and don’t get blinded by bright light either.
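
To make the "records changes, not pictures" idea concrete, here is a minimal Python sketch of how events could be derived from two brightness snapshots. It is an illustration under stated assumptions, not the DAVIS sensor's actual behavior or the researchers' code: the function name `events_between`, the `threshold` and `eps` values, and the use of a log-brightness comparison are all choices made for this example, and a real event camera reports changes asynchronously per pixel rather than by comparing whole frames.

```python
import math

def events_between(prev_frame, next_frame, threshold=0.2, eps=1e-3):
    """Return (row, col, polarity) for each pixel whose log-brightness
    changed by at least `threshold` between two snapshots.

    Hypothetical helper for illustration only: frames are lists of rows
    of brightness values in [0, 1]; `eps` avoids taking log(0).
    """
    events = []
    for r, (prev_row, next_row) in enumerate(zip(prev_frame, next_frame)):
        for c, (p, n) in enumerate(zip(prev_row, next_row)):
            delta = math.log(n + eps) - math.log(p + eps)
            if abs(delta) >= threshold:
                # Polarity +1 means the pixel got brighter, -1 darker.
                events.append((r, c, 1 if delta > 0 else -1))
    return events

# A static scene produces no events at all.
static = [[0.5, 0.5, 0.5],
          [0.5, 0.5, 0.5]]
print(events_between(static, static))  # []

# Two pixels change: one brightens, one darkens.
moved = [[0.5, 0.9, 0.5],
         [0.5, 0.5, 0.1]]
print(events_between(static, moved))   # [(0, 1, 1), (1, 2, -1)]
```

Comparing relative (logarithmic) rather than absolute brightness is one way to see why such a sensor could cope with both dim and bright scenes: the same proportional change triggers an event regardless of how much light there is overall.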

At the University of Zurich, they’re using a prototype sensor called DAVIS that embeds an event camera inside the pixel array of a standard camera, along with an IMU synchronized with both events and frames. With it, their quadrotor can fly itself autonomously, even under the kinds of lighting changes that would cause a regular camera to give up completely. As the researchers put it in their paper:

In this work, we present the first state estimation pipeline that leverages the complementary advantages of these two sensors by fusing in a tightly-coupled manner events, standard frames, and inertial measurements. We show that our hybrid pipeline leads to an accuracy improvement of 130% over event-only pipelines, and 85% over standard-frames only visual-inertial systems, while still being computationally tractable. Furthermore, we use our pipeline to demonstrate—to the best of our knowledge—the first autonomous quadrotor flight using an event camera for state estimation, unlocking flight scenarios that were not reachable with traditional visual inertial odometry, such as low-light environments and high dynamic range scenes: we demonstrate how we can even fly in low light (such as after switching completely off the light in a room) or scenes characterized by a very high dynamic range (one side of the room highly illuminated and another side of the room dark).

In order to detect relative motion for accurate state estimation, the quadrotor’s cameras try to identify unique image features and track how those features move. As the video shows, the standard camera’s tracking fails when the lighting changes or when it’s too dark, while the event camera is completely unfazed. The researchers haven’t yet tested this outside (where rapid transitions between bright sunlight and dark shadow are particular challenges for robots), but based on what we’ve seen so far, it seems very promising and we’re excited to see what kinds of fancy new capabilities sensors like these will enable.

“Hybrid, Frame and Event-based Visual Inertial Odometry for Robust, Autonomous Navigation of Quadrotors,” by Antoni Rosinol Vidal, Henri Rebecq, Timo Horstschaefer, and Davide Scaramuzza from the University of Zurich and the Swiss research consortium NCCR Robotics, has been submitted to IEEE Robotics and Automation Letters.

Photos: University of Zurich

Source: IEEE